1. Introduction

In 1980 Stuart Samuel gave what I consider to be one of the most elegant exact solutions of the 2D Ising model. He used Grassmann variables to formulate the problem in terms of a free-fermion model, via the fermionic path integral approach. The relevant Grassmann action is quadratic, so that the solution can be found via well-known formulas for Gaussian integrals.

In previous articles, I derived Onsager’s celebrated expression for the partition function of the 2D Ising model, using two different methods. First, I used a combinatorial method of graph counting that exploits the theory of random walks (see here). In the second article (see here), I reviewed the method of Schultz, Mattis and Lieb of treating the 2D Ising model as a problem of many fermions. Here I further explore the connection between the Ising model and fermions.

This article is based on my study notes. I have more or less followed Samuel’s solution of the 2D Ising model using Grassmann variables, but I have expanded the calculations for my own convenience.

Whereas the behavior of bosons can be described using standard path integrals, known as bosonic path integrals, the path integral formulation for fermions requires the use of Grassmann variables. Grassmann numbers can be integrated, but not with the standard approach based on Riemann sums, Lebesgue integrals, etc. Instead, they must be integrated using Berezin integration. I have previously written about Grassmann variables and Berezin integrals here.

In what follows, I assume familiarity with the 2D Ising model. Readers who find it difficult to understand the details are referred to the two previously mentioned articles, which are introductory and easier to understand.

The partition function can be approached as a power series either in a high temperature variable or in a low temperature one. Consider first the high temperature expansion. It is well known that the partition function of the 2D Ising model is proportional to the generating function of connected or disconnected graphs in which all nodes have even degree and edges link only nearest neighbors on the infinite square lattice. Graph nodes correspond to sites and links correspond to bonds. In such graphs, every incoming bond at a site has at least one corresponding outgoing bond, because each site has an even number of bonds. To each such “closed graph” there correspond directed graphs, in which the links are directed. Since each bond appears at most once in any such directed graph, we can enumerate such closed directed graphs by assigning pairs of Grassmann variables to each bond. In this case, the generating function, when evaluated in a suitable high temperature variable (such as $\tanh \beta J$), gives the Ising model partition function up to a known prefactor.

Here, I do not use the above high temperature expansion in terms of loops or multipolygons. Instead, I use a low temperature expansion and enumerate non-overlapping magnetic domains, following Samuel’s original work. Specifically, each spin configuration of the Ising model corresponds to a specific domain wall configuration. So summing over all domain wall configurations is equivalent to summing over all spin states, except for a 2-fold degeneracy, since each domain wall configuration corresponds to 2 possible spin configurations (related by flipping every spin).

Since the Ising model on the square lattice is self-dual, the high temperature approach using overlapping multipolygons and the low temperature approach using non-overlapping multipolygons on the dual lattice are, of course, equivalent. Either way, explicit evaluation of a Berezin integral gives the exact solution. Specifically, we assign 4 Grassmann variables to each site of the dual lattice. One can think of them as follows: there are two types of Bloch walls, “vertical” and “horizontal” domain walls, and each wall segment has two ends. A Bloch wall segment can thus be represented by Grassmann variables at 2 different sites. A corner of a Bloch domain can be represented by matching a vertical and a horizontal variable at a single site. Finally, so-called “monomer” terms consist of 2 horizontal or 2 vertical variables. They represent the absence of a corresponding bond, and also of corners. A single monomer term at a site represents a domain wall that goes straight through the site, perpendicular to the monomer and without turning a corner. Two monomer terms at the same site represent a site in the interior of a domain, i.e., not on a domain wall. Using the terms corresponding to Bloch walls, corners and monomers, we then construct an action.

2. Overview of the strategy

The solution strategy is as follows. We will exploit 2 properties of Grassmann variables. The first property is anticommutativity, which implies that the square of a Grassmann variable is zero. This nilpotency property can be exploited to count every domain wall unit (or bond, in the high temperature method) no more than once. The second property we will exploit is specific to the Berezin integral. Recall that a Berezin multiple integral is nonzero only if the Grassmann variables in the integrand exactly match the integration variables. If a single integration variable is not matched, the whole multiple integral vanishes, because $\int d\theta = 0$ for the Berezin integral over any Grassmann variable $\theta$ (whereas $\int d\theta\, \theta = 1$). From now onwards, we will say that the integrand “saturates” the Berezin integral if all integration variables are matched by integrand variables. So the second property is the ability of the multiple Berezin integral to select only terms that saturate the integral.
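To make nilpotency and saturation concrete, here is a minimal sketch of a Grassmann algebra in Python. It is not Samuel’s formalism or any library’s API: the class `Grassmann`, the function `berezin`, and the normalization (the product of all generators in increasing index order integrates to 1) are assumptions made for illustration.

```python
class Grassmann:
    """Grassmann algebra element stored as {sorted tuple of generator indices: coefficient}."""

    def __init__(self, terms=None):
        self.terms = {k: v for k, v in (terms or {}).items() if v != 0}

    @staticmethod
    def generator(i):
        return Grassmann({(i,): 1})

    @staticmethod
    def scalar(c):
        return Grassmann({(): c})

    def __add__(self, other):
        out = dict(self.terms)
        for key, c in other.terms.items():
            out[key] = out.get(key, 0) + c
        return Grassmann(out)

    def __mul__(self, other):
        out = {}
        for a, ca in self.terms.items():
            for b, cb in other.terms.items():
                if set(a) & set(b):
                    continue  # nilpotency: a repeated generator squares to zero
                # anticommute each generator of b leftwards past the larger ones in a
                swaps = sum(1 for i in a for j in b if i > j)
                key = tuple(sorted(a + b))
                out[key] = out.get(key, 0) + (-1) ** swaps * ca * cb
        return Grassmann(out)


def berezin(x, n):
    """Berezin integral over generators 0..n-1, normalised so that theta_0 theta_1 ... theta_{n-1}
    integrates to 1. Terms that do not contain every generator (do not saturate) give 0."""
    return x.terms.get(tuple(range(n)), 0)


th = [Grassmann.generator(i) for i in range(4)]
one = Grassmann.scalar(1)
print((th[0] * th[0]).terms)                                      # -> {}
print((th[1] * th[0]).terms)                                      # -> {(0, 1): -1}
print(berezin(th[0] * th[1] + th[2] * th[3], 4))                  # -> 0
print(berezin((one + th[0] * th[1]) * (one + th[2] * th[3]), 4))  # -> 1
```

The last line shows the mechanism used throughout: expanding a product of exponentials generates many terms, and the integral keeps only the one that contains every variable exactly once.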

The essence of the trick is to exponentiate a suitably chosen action. The Taylor series of a function of a finite number of Grassmann variables is a polynomial (because of nilpotency). This polynomial can encode all kinds of graphs and other strange groupings of parts of graphs, such as isolated corners or monomers. By Berezin integration, we can then select only the terms that saturate the integral. If the action is chosen appropriately, the saturating terms are precisely those that correspond to the non-overlapping Bloch domains. (In the high temperature variant, the action instead generates the desired multipolygons.)

It will turn out that for the 2D Ising model this action is a quadratic form, so that we essentially have a free-fermion model. Specifically, the quadratic action is partially “diagonalized” by the Fourier transform, immediately leading to the exact solution originally found by Onsager. Of all the methods of solving the Ising model, I find this one to be the simplest, most beautiful and most powerful.

What I also found quite fascinating is that for the cubic lattice one obtains a quartic action instead, as is well known to experts in the field. So, in this sense, the cubic Ising model is not directly equivalent to a free-fermion model, but rather to a model of interacting fermions.

3. The quadratic Grassmann action

We assume the reader is familiar with the Ising model on the square lattice. Let the Boltzmann weight of an excited bond, $t = e^{-\beta J}$, be our chosen low temperature variable. Then we can write the partition function as

$$ Z_N = \sum_{\{\sigma\}} e^{-\beta H}, \qquad (1) $$

where $H$ is the Ising model Hamiltonian and the sum is over all spin states. The choice of the symbol $Z_N$ for the partition function was made so that the letter $Z$ can be reserved for the partition function per site in the thermodynamic limit, to be defined further below.

For a given spin configuration, let us consider the set of all bonds in the excited state, i.e. with antiparallel spins. These excited bonds form the Bloch walls separating domains of opposite magnetization. On the dual lattice, these Bloch walls form non-overlapping loops or polygons. Moreover, every Bloch wall configuration corresponds to exactly 2 spin configurations, so that we can rewrite the partition function as

$$ Z_N = 2 \sum_{\text{walls}} t^{L}\, t^{-(2N-L)}, \qquad (2) $$

where $N$ is the number of sites (so that, with periodic boundary conditions, there are $2N$ bonds) and $L$ is the total length of the Bloch walls. Here, the factor $t^{L}$ is due to the Bloch walls and $2N-L$ is the number of bonds inside the Bloch domains, each contributing a factor $t^{-1} = e^{\beta J}$. We thus see that the partition function can be calculated by enumerating non-overlapping loops and summing them with the proper Boltzmann weights.
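As a sanity check on this rewriting, a brute-force sketch can enumerate every spin state of a tiny lattice and compare the direct Boltzmann sum with $t^{-2N}$ times the sum of $t^{2L}$, where $L$ counts antiparallel bonds. Summing over spin states rather than over wall configurations counts each wall configuration twice, which is the factor 2 in front of the sum. The periodic 3 × 3 lattice, the couplings tried, and the function name are arbitrary illustrative choices.

```python
import itertools
import math


def partition_functions(n_rows, n_cols, beta_j):
    """Return (direct sum, wall sum) for the Ising model on an n_rows x n_cols torus.

    direct sum: sum over spins of exp(beta J * sum_<ij> s_i s_j)
    wall sum:   t**(-2N) * sum over spins of t**(2L), with t = exp(-beta J), N sites and
                L = number of antiparallel bonds (each wall configuration appears twice).
    Sizes of at least 3 are assumed so that no bond is counted twice.
    """
    t = math.exp(-beta_j)
    n_sites = n_rows * n_cols
    direct = 0.0
    walls = 0.0
    for spins in itertools.product((1, -1), repeat=n_sites):
        bond_sum = 0   # sum over the 2N bonds of s_i s_j
        n_excited = 0  # L, the total Bloch-wall length
        for r in range(n_rows):
            for c in range(n_cols):
                s = spins[r * n_cols + c]
                right = spins[r * n_cols + (c + 1) % n_cols]
                down = spins[((r + 1) % n_rows) * n_cols + c]
                for neighbour in (right, down):
                    bond_sum += s * neighbour
                    n_excited += s != neighbour
        direct += math.exp(beta_j * bond_sum)
        walls += t ** (2 * n_excited)
    return direct, t ** (-2 * n_sites) * walls


print(partition_functions(3, 3, 0.44))  # the two numbers agree up to rounding
```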

Let us define a modified partition function for these loops by

$$ Z'_N = \sum_{\text{walls}} t^{2L}, \qquad (3) $$

so that $Z_N = 2\, t^{-2N} Z'_N$. Our goal henceforth is thus to calculate $Z'_N$. To do so, we will use Grassmann numbers and Berezin integration.

Let us define at each site $x$ of the 2D lattice a pair of Grassmann variables in the vertical direction, $\eta_{+2}(x)$ and $\eta_{-2}(x)$, and another pair for the horizontal direction, $\eta_{+1}(x)$ and $\eta_{-1}(x)$.

We can now define an action for our fermionic path integral as follows. Each configuration of non-overlapping loops consists of (i) individual segments of loops that link neighboring sites on the dual lattice (Bloch wall units), (ii) sites where the domain wall goes straight through the site and (iii) sites where the Bloch domain cuts a corner. We will thus write the total action as a sum of 3 terms: (i) the Bloch wall or “line” term $S_L$, (ii) the “monomer” term $S_M$ and (iii) the corner term $S_C$:

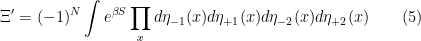

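We will then exponentiate this action and use a Berezin integral to obtain the modified partition function $Z'_N$ of (3):

$$ Z'_N = (-1)^N \int \prod_x d\eta(x)\; e^{S}, \qquad (5) $$

where $d\eta(x)$ stands for the product of the four Grassmann differentials at site $x$. We will use the same sign convention as Samuel, normalizing the integral at each site so that

$$ \int d\eta(x)\; \eta_{-1}(x)\,\eta_{+1}(x)\,\eta_{-2}(x)\,\eta_{+2}(x) = 1 . \qquad (6) $$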

Let us define the Bloch wall terms by

![\displaystyle S_L= t^2 \sum_x[ \eta_{+1}(x) \eta_{-1}(x +\hat 1) + \eta_{+2}(x) \eta_{-2}(x +\hat 2) ] ~. \ \ \ \ \ (7)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+S_L%3D+t%5E2+%5Csum_x%5B+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-1%7D%28x+%2B%5Chat+1%29+%2B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-2%7D%28x+%2B%5Chat+2%29+%5D+%7E.+%5C+%5C+%5C+%5C+%5C+%287%29&bg=ffffff&fg=000000&s=0&c=20201002)

To remain consistent with this definition the corner term must be defined as

![\displaystyle S_C = \sum_x[ \eta_{+1}(x) \eta_{-2}(x) + \eta_{+2}(x) \eta_{-1}(x) + \eta_{+2}(x) \eta_{+1}(x) + \eta_{-2}(x) \eta_{-1}(x) ] ~. \ \ \ \ \ (8)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+S_C+%3D+%5Csum_x%5B+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%2B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%2B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%2B+%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5D+%7E.+%5C+%5C+%5C+%5C+%5C+%288%29&bg=ffffff&fg=000000&s=0&c=20201002)

To see why, consider a corner at the site $x$ formed along a path going horizontally forward followed by vertically forward. We thus have 2 Bloch wall segments meeting at the corner. We want to saturate first the horizontal wall, then the vertical. The horizontal wall $\eta_{+1}(x-\hat 1)\,\eta_{-1}(x)$ contributes $\eta_{-1}(x)$ at the site $x$, and the vertical wall $\eta_{+2}(x)\,\eta_{-2}(x+\hat 2)$ contributes $\eta_{+2}(x)$. So we want to saturate the Berezin integral at the site $x$ with the corner factor $\eta_{+1}(x)\,\eta_{-2}(x)$, which supplies the two missing variables. This is the first term in the corner action. The 3 other terms are similarly deduced.

Meanwhile, the monomer terms are even simpler. We want the monomer terms to “do nothing”, i.e. to contribute a factor of 1 when (and only when) needed. From the sign convention (6) we thus obtain simply

![\displaystyle S_M= \sum_x[ \eta_{-1}(x) \eta_{+1}(x) + \eta_{-2}(x) \eta_{+2}(x) ] ~ . \ \ \ \ \ (9)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+S_M%3D+%5Csum_x%5B+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%2B+%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5D+%7E+.+%5C+%5C+%5C+%5C+%5C+%289%29&bg=ffffff&fg=000000&s=0&c=20201002)

Note that corner and monomer terms have an even number of Grassmann variables per site, while the line term has only one Grassmann variable on each of two neighboring sites. So to saturate the Berezin integral, an even number of line terms (0, 2 or 4) must come together at a given site.

The Berezin integral for a fixed site $x$ can only saturate in the following ways:

- Two monomer terms, one horizontal and one vertical.

- Two lines and a monomer.

- Two lines and a corner.

- Four lines.

The following are prohibited at any site:

- An odd number of lines (because there is no way to saturate missing Grassmann variables).

- One corner and one monomer (because one Grassmann variable will necessarily be repeated, so that the nilpotency kills the term, and similarly one variable will be missing, also leading to zero).

- Four lines together with a corner, such as a “double corner” of 4 domain walls and 2 corners (because then there are 6 or more Grassmann variables at the site, so at least one of them is repeated).
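The case analysis above can be double-checked by brute force: list every set of pieces that could meet at a site (four line ends, the four corner terms of (8) and the two monomer terms of (9)) and keep those that use each of the four site variables exactly once. This sketch tracks only which variables appear, not signs or weights, and the string labels are illustrative. Besides the four allowed patterns listed above, it also finds the two-corner combinations discussed next.

```python
from collections import Counter
from itertools import combinations

SITE_VARS = frozenset({"-1", "+1", "-2", "+2"})  # eta_{-1}, eta_{+1}, eta_{-2}, eta_{+2} at x

# A line term ending at x supplies one variable there; corner terms (8) and
# monomer terms (9) supply two variables at x.
PIECES = (
    [("line", frozenset({v})) for v in sorted(SITE_VARS)]
    + [("corner", frozenset(p)) for p in (("+1", "-2"), ("+2", "-1"), ("+2", "+1"), ("-2", "-1"))]
    + [("monomer", frozenset(p)) for p in (("-1", "+1"), ("-2", "+2"))]
)


def saturating_combinations():
    """All sets of distinct pieces that use every site variable exactly once."""
    found = []
    for r in range(1, len(PIECES) + 1):
        for combo in combinations(PIECES, r):
            used = [v for _, site_vars in combo for v in site_vars]
            # no repeats (nilpotency) and nothing missing (saturation)
            if len(used) == len(set(used)) and set(used) == SITE_VARS:
                found.append(tuple(sorted(kind for kind, _ in combo)))
    return found


def count_by_kind():
    return Counter(saturating_combinations())


for kinds, count in sorted(count_by_kind().items()):
    print(count, "x", " + ".join(kinds))
```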

There is one other interesting case: 2 corner terms with no lines. If the two corners involve complementary pairs of variables, each Grassmann variable at the site appears exactly once, so such terms do in fact contribute. Moreover, every such term is matched by another term with the two other kinds of corners. For example, the two-corner term

![\displaystyle [\eta_{+1}(x) \eta_{-2}(x) ][ \eta_{+2}(x) \eta_{-1}(x) ]](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5B%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5D%5B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5D+&bg=ffffff&fg=000000&s=0&c=20201002)

is matched by the term

![\displaystyle [\eta_{-2}(x) \eta_{-1}(x) ][ \eta_{+2}(x) \eta_{+1}(x) ] ~.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5B%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5D%5B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5D+%7E.+&bg=ffffff&fg=000000&s=0&c=20201002)

But

![\displaystyle \begin{array}{rcl} [\eta_{-2}(x) \eta_{-1}(x) ][ \eta_{+2}(x) \eta_{+1}(x) ] &=& -\eta_{-2}(x) \eta_{+2}(x) \eta_{-1}(x) \eta_{+1}(x) \\ &=& +\eta_{-2}(x) \eta_{+2}(x) \eta_{+1}(x) \eta_{-1}(x) \\ &=& -\eta_{-2}(x) \eta_{+1}(x) \eta_{+2}(x) \eta_{-1}(x) \\ &=& +\eta_{+1}(x) \eta_{-2}(x) \eta_{+2}(x) \eta_{-1}(x) ~. \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cbegin%7Barray%7D%7Brcl%7D+%5B%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5D%5B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5D+%26%3D%26+-%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5C%5C+%26%3D%26+%2B%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5C%5C+%26%3D%26+-%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5C%5C+%26%3D%26+%2B%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%7E.+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

So the two ways of combining 2 corners give equal terms, and the two-corner contribution is doubled. But the first double corner is actually the negative of the term with two monomers:

![\displaystyle \begin{array}{rcl} [\eta_{+1}(x) \eta_{-2}(x) ][ \eta_{+2}(x) \eta_{-1}(x) ] &=& \eta_{+2}(x) \eta_{-1}(x) \eta_{+1}(x) \eta_{-2}(x) \\ &=& - \eta_{-1}(x) \eta_{+2}(x) \eta_{+1}(x) \eta_{-2}(x) \\ &=& + \eta_{-1}(x) \eta_{+1}(x) \eta_{+2}(x) \eta_{-2}(x) \\ &=& - \eta_{-1}(x) \eta_{+1}(x) \eta_{-2}(x) \eta_{+2}(x) \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cbegin%7Barray%7D%7Brcl%7D+%5B%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5D%5B+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5D+%26%3D%26+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5C%5C+%26%3D%26+-+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5C%5C+%26%3D%26+%2B+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5C%5C+%26%3D%26+-+%5Ceta_%7B-1%7D%28x%29+%5Ceta_%7B%2B1%7D%28x%29+%5Ceta_%7B-2%7D%28x%29+%5Ceta_%7B%2B2%7D%28x%29+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

so that at each site the double monomer term plus the two double corner terms produce a net contribution of $1 - 1 - 1 = -1$. If there are $N$ sites, then the number of sites on the lines is necessarily even, so that the number $N_0$ of sites not on the walls satisfies $(-1)^{N_0} = (-1)^{N}$. So there is an overall factor of $(-1)^{N}$, as seen in (5).
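The sign bookkeeping above can be reproduced mechanically. This sketch assumes that convention (6) normalizes $\eta_{-1}\eta_{+1}\eta_{-2}\eta_{+2}$ at a site to $+1$, as the choice of monomer terms (9) requires; each transposition of neighboring Grassmann variables then contributes a factor $-1$, so the sign is the parity of the permutation. The labels and the function name are illustrative.

```python
# Canonical order at one site, matching the assumed form of convention (6):
# eta_{-1} eta_{+1} eta_{-2} eta_{+2} integrates to +1.
ORDER = {"-1": 0, "+1": 1, "-2": 2, "+2": 3}


def site_integral(word):
    """Berezin integral over one site of a product of site variables, given by their labels."""
    idx = [ORDER[label] for label in word]
    if sorted(idx) != [0, 1, 2, 3]:
        return 0  # a repeated variable (nilpotency) or a missing one (no saturation)
    # every transposition of neighbouring Grassmann variables flips the sign
    inversions = sum(1 for a in range(4) for b in range(a + 1, 4) if idx[a] > idx[b])
    return (-1) ** inversions


double_monomer = site_integral(["-1", "+1", "-2", "+2"])   # both S_M terms, eq. (9)
double_corner_a = site_integral(["+1", "-2", "+2", "-1"])  # [eta_{+1} eta_{-2}][eta_{+2} eta_{-1}]
double_corner_b = site_integral(["-2", "-1", "+2", "+1"])  # [eta_{-2} eta_{-1}][eta_{+2} eta_{+1}]
net = double_monomer + double_corner_a + double_corner_b
print(double_monomer, double_corner_a, double_corner_b, net)  # -> 1 -1 -1 -1
```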

Meanwhile, every domain wall segment has weight $t^2$, so that a graph of non-overlapping loops of total loop length $L$ will have a weight of $t^{2L}$. There are many different ways to order the lines, corners and monomers, but this multiplicity is cancelled by the factorial in the denominator of the Taylor expansion of the exponential function. The final result is that the right hand sides of (5) and (3) are equal.

4. Diagonalization and exact solution

The quadratic action is translationally invariant, so the Fourier transform will diagonalize it, i.e. in the new Fourier conjugate variable the action is diagonal. By this we mean that the action does not mix different Fourier frequencies, up to sign: a wavevector $k$ is coupled only to itself and to $-k$.

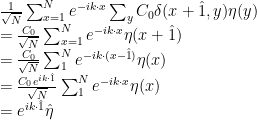

So let us define the (unitary) Fourier transform $\hat\eta(k)$ of the Grassmann variables $\eta(x)$:

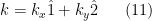

Here $k$ and $x$ are 2-dimensional vectors. It will be more convenient for us to write

$$ k = (k_x, k_y) = \left( \frac{2\pi m}{N_1},\ \frac{2\pi n}{N_2} \right), $$

where $N_1$ and $N_2$ are the numbers of columns and rows of the lattice (so that $N = N_1 N_2$) and $m$, $n$ are integers.

We could take $m$ ranging as $m = 0, 1, \ldots, N_1 - 1$, but we will instead take $N_1$ and $N_2$ as odd and use negative wavenumbers as follows. Let $m = -\tfrac{N_1-1}{2}, \ldots, \tfrac{N_1-1}{2}$ and $n = -\tfrac{N_2-1}{2}, \ldots, \tfrac{N_2-1}{2}$. Then $-\pi < k_x < \pi$ and $-\pi < k_y < \pi$.

It is easy to check that the $\hat\eta(k)$ are Grassmann numbers, a fact that follows from the unitarity (in particular, the linearity) of the Fourier transform. Unitarity also guarantees that the Berezin integration measure is unchanged by this change of variables, so that we can explicitly evaluate the Berezin integral provided we can rewrite the Grassmann action in simple enough form in the Fourier transformed Grassmann variables. This we do next.
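The reason for choosing odd lattice dimensions can be illustrated in a few lines: with symmetric integer labels, every wavevector lies strictly inside $(-\pi, \pi)$, and $k = 0$ is the only wavevector equal to its own negative, so the remaining ones split cleanly into pairs $\{k, -k\}$. That pairing is what the Berezin integral is factored over later on. In this sketch, the integer labels and the half-space rule ($k_x > 0$, or $k_x = 0$ and $k_y > 0$) are illustrative assumptions.

```python
import math


def wavevector(m, n, n1, n2):
    """k = (2*pi*m/n1, 2*pi*n/n2) for integer labels m, n."""
    return (2 * math.pi * m / n1, 2 * math.pi * n / n2)


def wavevector_labels(n1, n2):
    """Symmetric integer labels (m, n); odd n1 and n2 are assumed, as in the text."""
    if n1 % 2 == 0 or n2 % 2 == 0:
        raise ValueError("odd lattice dimensions assumed")
    h1, h2 = (n1 - 1) // 2, (n2 - 1) // 2
    return [(m, n) for m in range(-h1, h1 + 1) for n in range(-h2, h2 + 1)]


def half_space(labels):
    """Keep one label from each pair {k, -k}: k_x > 0, or k_x == 0 and k_y > 0."""
    return [(m, n) for m, n in labels if m > 0 or (m == 0 and n > 0)]


labels = wavevector_labels(5, 3)
half = half_space(labels)
print(len(labels), len(half))  # -> 15 7
print(all(abs(c) < math.pi for m, n in labels for c in wavevector(m, n, 5, 3)))  # -> True
```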

Notice that the action is quadratic in the $\eta_i(x)$. Specifically, the action is a quadratic form in the Grassmann variables that we can write as

$$ S = \sum_{x,y} \sum_{i,j} \eta_i(x)\, A_{ij}(x,y)\, \eta_j(y), \qquad i, j \in \{-1, +1, -2, +2\}. $$

We will explicitly define $A$ below, but for the moment let us not worry about it, except to note that it is simply a matrix or linear operator kernel. Note that the “matrix” $A$ is actually a tensor of rank 4, because there are 4 indices: $i$, $j$, $x$ and $y$. However, for a fixed pair of not-necessarily-distinct sites $x$ and $y$, $A(x,y)$ is a genuine ($4 \times 4$) matrix with only two indices. Similarly, for fixed $i$ and $j$, $A_{ij}$ is an $N \times N$ matrix. We will apply the Fourier transform on the site variables $x$ and $y$, noting that the action has the form of an inner product; by the unitarity of the Fourier transform, it is possible to rewrite the action as

$$ S = \sum_{k,k'} \sum_{i,j} \hat\eta_i^{*}(k)\, \hat A_{ij}(k,k')\, \hat\eta_j(k'). $$

All we have done here is apply Plancherel’s theorem, sometimes also known as Parseval’s theorem. Hatted symbols represent Fourier transformed quantities.

I have previously written about the magical powers of the Fourier transform. For example: (i) convolutions become products and vice versa under Fourier transformation; (ii) differential operators transform to simple algebraic multipliers; (iii) translations become phase shifts. It is this last property which is the most important for our purposes here.

The translationally invariant quadratic action for the 2D Ising model only connects neighboring sites. The only non-diagonal part of the action is due to this shift to neighboring sites. But upon Fourier transformation, we get rid of the shifts and obtain an action which is diagonal in the Fourier variable $k$. In other words, $\hat A(k,k') = \hat A(k,k)\,\delta(k,k')$, where $\delta(k,k')$ is the Kronecker delta function.

Let us introduce more compact notation to make the calculations easier. Let us define the vector

![\displaystyle \eta= \left[\begin{array}{c} \eta_{-1} \\ \eta_{+1} \\ \eta_{-2} \\ \eta_{+2} \end{array} \right] \ \ \ \ \ (15)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Ceta%3D+%5Cleft%5B%5Cbegin%7Barray%7D%7Bc%7D+%5Ceta_%7B-1%7D+%5C%5C+%5Ceta_%7B%2B1%7D+%5C%5C+%5Ceta_%7B-2%7D+%5C%5C+%5Ceta_%7B%2B2%7D+%5Cend%7Barray%7D+%5Cright%5D+%5C+%5C+%5C+%5C+%5C+%2815%29&bg=ffffff&fg=000000&s=0&c=20201002)

and similarly for $\hat\eta$. Then we can write

$$ S = \sum_{x,y} \eta^{T}(x)\, A(x,y)\, \eta(y), \qquad A = A_C + A_M + A_L . $$

The superscript $T$ denotes transpose. The matrices $A_C$ and $A_M$ are given by (8) and (9) and are diagonal in the site indices, i.e. in $x$ and $y$:

$$
\begin{array}{rcl}
A_C(x,y) &=& \delta(x,y) \left[ \begin{array}{cccc} 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 1 & 0 & 0 & 0 \\ 1 & 1 & 0 & 0 \end{array} \right] \\
A_M(x,y) &=& \delta(x,y) \left[ \begin{array}{cccc} 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{array} \right] ~,
\end{array}
$$

where $\delta(x,y)$ is a Kronecker delta function, not to be confused with the Dirac delta. The matrix $A_L$ is not diagonal in the site indices because it connects neighboring sites:

![\displaystyle \begin{array}{rcl} A_L(x,y)&=& t^2\delta(x+\hat 1,y) \left[ \begin{array}{cccc} 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{array} \right] +t^2 \delta(x+\hat 2,y) \left[ \begin{array}{cccc} 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{array} \right] ~. \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cbegin%7Barray%7D%7Brcl%7D+A_L%28x%2Cy%29%26%3D%26+t%5E2%5Cdelta%28x%2B%5Chat+1%2Cy%29+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+0+%26+0+%26+0+%26+0+%5C%5C+1+%26+0+%26+0+%26+0+%5C%5C+0+%26+0+%26+0+%26+0+%5C%5C+0+%26+0+%26+0+%26+0+%5Cend%7Barray%7D+%5Cright%5D+%2Bt%5E2+%5Cdelta%28x%2B%5Chat+2%2Cy%29+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+0+%26+0+%26+0+%26+0+%5C%5C+0+%26+0+%26+0+%26+0+%5C%5C+0+%26+0+%26+0+%26+0+%5C%5C+0+%26+0+%26+1+%26+0+%5Cend%7Barray%7D+%5Cright%5D+%7E.+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

In terms of $\hat\eta$, we can thus write the action as

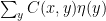

Here $B(k,k) = \hat A(k,k)$ denotes the diagonal $4 \times 4$ block of the Fourier-transformed kernel. The reason the sum is now over a single index $k$ is that $A$ is translationally invariant, and application of Parseval’s (or Plancherel’s) theorem allows us to convert the site shift to a phase, so that $\hat A(k,k') = B(k,k)\,\delta(k,k')$. In other words, $\hat A$ is diagonal in the Fourier variables such as $k$, which is conjugate to the site index $x$.

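As an illustrative example, let us show this last point explicitly. Consider the Fourier transform of $A(x,y) = a(y-x)$, where $A$ is translationally invariant, i.e. depends only on the separation $y - x$:

$$ \hat A(k,k') = \frac{1}{N}\sum_{x,y} e^{-ik\cdot x}\, a(y-x)\, e^{ik'\cdot y} = \Big[\frac{1}{N}\sum_{x} e^{i(k'-k)\cdot x}\Big] \sum_{r} a(r)\, e^{ik'\cdot r} = \delta(k,k') \sum_{r} a(r)\, e^{ik\cdot r} . $$

Hence $\hat A$ is diagonal in the sense previously explained. Here $a(0) = A_C + A_M$, while $a(\hat 1)$ and $a(\hat 2)$ are the two $t^2$ terms of $A_L$, so $B(k,k)$ is easily calculated to be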

![\displaystyle \begin{array}{rcl} B(k,k) &=& \left[ \begin{array}{cccc} ~~0 & 1 & 0 & 0 \\ 0 & ~~0 & 1 & 0 \\ 1 & 0 & ~~0 & 1 \\ 1 & 1 & 0 & ~~0 \end{array} \right] \nonumber \\ & & \quad +t^2 e^{ik\cdot \hat 1} \left[ \begin{array}{cccc} ~~0 & 0 & ~~0 & ~~0 \\ 1 & ~~0 & ~~0 & ~~0 \\ ~~0 & ~~0 & ~~0 & ~~0 \\ ~~0 & ~~0 & ~~0 & ~~0 \end{array} \right] +t^2 e^{ik\cdot \hat 2} \left[ \begin{array}{cccc} ~~0 & ~~0 & ~~0 & ~~0 \\ ~~0 & ~~0 & ~~0 & ~~0 \\ ~~0 & ~~0 & ~~0 & 0 \\ ~~0 & ~~0 & 1 & ~~0 \end{array} \right] \\ &=& \left[ \begin{array}{cccc} ~~0 & 1 & 0 & 0 \\ t^2 e^{ik_x} & ~~0 & 1 & 0 \\ 1 & 0 & ~~0 & 1 \\ 1 & 1 & t^2 e^{ik_y} & ~~0 \end{array} \right] ~. \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cbegin%7Barray%7D%7Brcl%7D+B%28k%2Ck%29+%26%3D%26+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+%7E%7E0+%26+1+%26+0+%26+0+%5C%5C+0+%26+%7E%7E0+%26+1+%26+0+%5C%5C+1+%26+0+%26+%7E%7E0+%26+1+%5C%5C+1+%26+1+%26+0+%26+%7E%7E0+%5Cend%7Barray%7D+%5Cright%5D+%5Cnonumber+%5C%5C+%26+%26+%5Cquad+%2Bt%5E2+e%5E%7Bik%5Ccdot+%5Chat+1%7D+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+%7E%7E0+%26+0+%26+%7E%7E0+%26+%7E%7E0+%5C%5C+1+%26+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%5C%5C+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%5C%5C+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%5Cend%7Barray%7D+%5Cright%5D+%2Bt%5E2+e%5E%7Bik%5Ccdot+%5Chat+2%7D+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%5C%5C+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%5C%5C+%7E%7E0+%26+%7E%7E0+%26+%7E%7E0+%26+0+%5C%5C+%7E%7E0+%26+%7E%7E0+%26+1+%26+%7E%7E0+%5Cend%7Barray%7D+%5Cright%5D+%5C%5C+%26%3D%26+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+%7E%7E0+%26+1+%26+0+%26+0+%5C%5C+t%5E2+e%5E%7Bik_x%7D+%26+%7E%7E0+%26+1+%26+0+%5C%5C+1+%26+0+%26+%7E%7E0+%26+1+%5C%5C+1+%26+1+%26+t%5E2+e%5E%7Bik_y%7D+%26+%7E%7E0+%5Cend%7Barray%7D+%5Cright%5D+%7E.+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)
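The claim that the Fourier transform diagonalizes the kernel can be checked numerically on a small torus. This sketch assumes periodic boundary conditions and the convention $\eta(x) = N^{-1/2}\sum_k e^{ik\cdot x}\hat\eta(k)$, chosen because it reproduces the phases $e^{ik_x}$ and $e^{ik_y}$ in $B(k,k)$ above; the helper names and the value of $t$ are hypothetical. It computes $\hat A(k,k')$ directly from the real-space kernel and compares it with $\delta(k,k')\,B(k,k)$.

```python
import cmath
import math

# On-site part A_C + A_M in the basis (eta_{-1}, eta_{+1}, eta_{-2}, eta_{+2}).
ON_SITE = [[0, 1, 0, 0],
           [0, 0, 1, 0],
           [1, 0, 0, 1],
           [1, 1, 0, 0]]


def fourier_kernel(k, kp, t, n1, n2):
    """hat A(k, k') = (1/N) sum_{x,y} e^{-i k.x} A(x, y) e^{i k'.y} on an n1 x n2 torus."""
    n_sites = n1 * n2
    out = [[0j] * 4 for _ in range(4)]
    for x1 in range(n1):
        for x2 in range(n2):
            left = cmath.exp(-1j * (k[0] * x1 + k[1] * x2)) / n_sites
            right = cmath.exp(1j * (kp[0] * x1 + kp[1] * x2))  # y = x
            for i in range(4):
                for j in range(4):
                    out[i][j] += left * ON_SITE[i][j] * right
            # line terms t^2 eta_{+1}(x) eta_{-1}(x+1hat) and t^2 eta_{+2}(x) eta_{-2}(x+2hat);
            # wrap-around is automatic because k' is a multiple of 2*pi/n1 (resp. 2*pi/n2)
            out[1][0] += left * t**2 * right * cmath.exp(1j * kp[0])
            out[3][2] += left * t**2 * right * cmath.exp(1j * kp[1])
    return out


def b_matrix(k, t):
    """B(k, k) as given in the text."""
    s = t * t
    return [[0, 1, 0, 0],
            [s * cmath.exp(1j * k[0]), 0, 1, 0],
            [1, 0, 0, 1],
            [1, 1, s * cmath.exp(1j * k[1]), 0]]


def max_deviation(t=0.7, n1=3, n2=3):
    """Largest |hat A(k, k') - delta(k, k') B(k, k)| over all pairs of wavevectors."""
    modes = [(2 * math.pi * m / n1, 2 * math.pi * n / n2) for m in range(n1) for n in range(n2)]
    worst = 0.0
    for k in modes:
        for kp in modes:
            a_hat = fourier_kernel(k, kp, t, n1, n2)
            expected = b_matrix(k, t) if k == kp else [[0] * 4 for _ in range(4)]
            for i in range(4):
                for j in range(4):
                    worst = max(worst, abs(a_hat[i][j] - expected[i][j]))
    return worst


print(max_deviation())  # rounding error only
```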

Notice that a daggered variable $\hat\eta^\dagger(k)$ (i.e., a conjugate transpose) appears in the action. We need to take care of this. Since $\eta(x)$ is, in principle, a “real” Grassmann variable, its Fourier transform satisfies $[\hat\eta(k)]^* = \hat\eta(-k)$, so that $\hat\eta^\dagger(k) = \hat\eta^{T}(-k)$. This “Hermitian” property of the Fourier transform of real functions allows us to write the action as

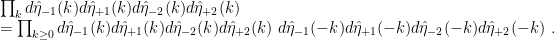

We can explicitly evaluate the needed Berezin integral, but some care is necessary. Observe that the action “mixes” the frequencies $k$ and $-k$. When we rewrite the exponential of the sum in the above action as a product of exponentials of the summands, these exponentials will contain not only the 4 Grassmann variables that make up the vector $\hat\eta(k)$, but also the other 4 variables in $\hat\eta(-k)$. So the full Berezin integral will factor not into Berezin integrals over 4 variables, but over 8 variables. We will therefore regroup the Grassmann differentials in groups of 8 rather than in groups of 4, pairing the differentials of $\hat\eta(k)$ with those of $\hat\eta(-k)$.

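In order to correctly factor the full Berezin integral, we need to collect all terms with $\hat\eta(k)$ and $\hat\eta(-k)$, which we can do by rewriting the sum in the action over only half of the values of $k$. We will abuse the notation and write $k > 0$ to mean that $k$ and $-k$ are not both included in the summation. For instance, we can accomplish this by taking $k_x > 0$, together with $k_y > 0$ on the line $k_x = 0$. With this notation, we can write

$$ S = \sum_{k>0} \left[ \hat\eta^\dagger(k)\, B(k,k)\, \hat\eta(k) + \hat\eta^\dagger(-k)\, B(-k,-k)\, \hat\eta(-k) \right]. $$

Since $\hat\eta^\dagger(-k) = \hat\eta^{T}(k)$ and $B(-k,-k) = B(k,k)^*$, transposing the second bilinear (which costs a minus sign, because the Grassmann variables anticommute) gives $\hat\eta^\dagger(-k)\, B(-k,-k)\, \hat\eta(-k) = -\hat\eta^\dagger(k)\, B^\dagger(k,k)\, \hat\eta(k)$. If we define

$$ B'(k) = B(k,k) - B^\dagger(k,k), $$

then we can write the action as

$$ S = \sum_{k>0} \hat\eta^\dagger(k)\, B'(k)\, \hat\eta(k). $$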

Let us write

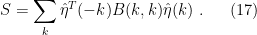

The full Berezin integral now factors and can be written

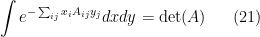

Each of the Berezin integral factors is a Gaussian integral. Recall that

$$ \int \prod_i d\theta_i\, d\bar\theta_i \; e^{\bar\theta^{T} M \theta} = \det M $$

for Grassmann numbers $\theta_i$ and $\bar\theta_i$ and a complex matrix $M$ (see here for an explanation). So

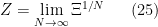
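The Gaussian formula can be verified by brute force with a tiny Grassmann algebra: expand the exponential of $\bar\theta^{T} M \theta$ as a finite series and read off the saturating coefficient. This is a sketch under a stated normalization (the measure $\prod_i d\theta_i\, d\bar\theta_i$, with $\bar\theta_0\theta_0\bar\theta_1\theta_1\cdots$ integrating to 1); other orderings of the differentials can change the overall sign. The function names and test matrices are arbitrary.

```python
from fractions import Fraction
from itertools import permutations


def mul(x, y):
    """Product of Grassmann elements stored as {sorted generator tuple: coefficient}."""
    out = {}
    for a, ca in x.items():
        for b, cb in y.items():
            if set(a) & set(b):
                continue  # repeated generator: the term vanishes
            swaps = sum(1 for i in a for j in b if i > j)
            key = tuple(sorted(a + b))
            out[key] = out.get(key, 0) + (-1) ** swaps * ca * cb
    return {k: v for k, v in out.items() if v != 0}


def gaussian_berezin(m):
    """Berezin integral of exp(thetabar^T M theta); thetabar_i -> index 2i, theta_i -> 2i+1."""
    n = len(m)
    quad = {}
    for i in range(n):
        for j in range(n):
            if m[i][j] != 0:
                bar_i, th_j = 2 * i, 2 * j + 1
                sign = 1 if bar_i < th_j else -1  # reorder thetabar_i theta_j if needed
                key = tuple(sorted((bar_i, th_j)))
                quad[key] = quad.get(key, 0) + sign * Fraction(m[i][j])
    term = {(): Fraction(1)}
    total = dict(term)
    for p in range(1, n + 1):  # (thetabar^T M theta)^p vanishes for p > n
        term = {k: v / p for k, v in mul(term, quad).items()}  # builds X^p / p!
        for k, v in term.items():
            total[k] = total.get(k, 0) + v
    return total.get(tuple(range(2 * n)), Fraction(0))  # saturating coefficient


def leibniz_det(m):
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(1 for a in range(n) for b in range(a + 1, n) if perm[a] > perm[b])
        prod = 1
        for i in range(n):
            prod *= m[i][perm[i]]
        total += (-1) ** inversions * prod
    return total


M = [[2, -1, 3], [0, 4, 1], [5, 2, -2]]
print(gaussian_berezin(M), leibniz_det(M))  # -> -85 -85
```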

Removing the restriction to $k > 0$, we have

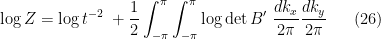

Taking logarithms and dividing by the number of sites $N$, we have

$$ \frac{1}{N}\log Z_N = \frac{\log 2}{N} + \log t^{-2} + \frac{1}{2N}\sum_{k} \log \det B'(k). $$

In the thermodynamic limit, the sum becomes an integral and the partition function per site, $Z = \lim_{N\to\infty} Z_N^{1/N}$, is thus given by

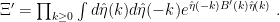

The anti-Hermitian matrix $B'(k)$ is easily found:

![\displaystyle B'(k)= \left[ \begin{array}{cccc} ~~0 & 1- t^2 e^{-ik_x} & -1 & -1 \\ -(1-t^2 e^{ik_x}) & ~~0 & 1 & -1 \\ 1 & -1 & ~~0 & (1-t^2e^{-ik_y}) \\ 1 & 1 & -(1-t^2e^{ik_y}) & ~~0 \end{array} \right] ~. \ \ \ \ \ (27)](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+B%27%28k%29%3D+%5Cleft%5B+%5Cbegin%7Barray%7D%7Bcccc%7D+%7E%7E0+%26+1-+t%5E2+e%5E%7B-ik_x%7D+%26+-1+%26+-1+%5C%5C+-%281-t%5E2+e%5E%7Bik_x%7D%29+%26+%7E%7E0+%26+1+%26+-1+%5C%5C+1+%26+-1+%26+%7E%7E0+%26+%281-t%5E2e%5E%7B-ik_y%7D%29+%5C%5C+1+%26+1+%26+-%281-t%5E2e%5E%7Bik_y%7D%29+%26+%7E%7E0+%5Cend%7Barray%7D+%5Cright%5D+%7E.+%5C+%5C+%5C+%5C+%5C+%2827%29&bg=ffffff&fg=000000&s=0&c=20201002)
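A quick numerical cross-check of (27), assuming $B'(k)$ is the anti-Hermitian combination $B(k,k) - B^\dagger(k,k)$ of the matrix $B(k,k)$ found above (with this assumption every entry of (27) is reproduced). The sketch also compares the numerical determinant with $(1+t^4)^2 - 2t^2(t^4-1)(\cos k_x + \cos k_y)$, the argument of the logarithm in the final result. The sample wavevectors and values of $t$ are arbitrary.

```python
import cmath
import math


def b_matrix(kx, ky, t):
    """B(k, k) from the text, basis (eta_{-1}, eta_{+1}, eta_{-2}, eta_{+2})."""
    s = t * t
    return [[0, 1, 0, 0],
            [s * cmath.exp(1j * kx), 0, 1, 0],
            [1, 0, 0, 1],
            [1, 1, s * cmath.exp(1j * ky), 0]]


def b_prime(kx, ky, t):
    """Assumed construction B'(k) = B(k, k) - B(k, k)^dagger."""
    b = b_matrix(kx, ky, t)
    return [[b[i][j] - b[j][i].conjugate() for j in range(4)] for i in range(4)]


def b_prime_eq27(kx, ky, t):
    """Entries of B'(k) exactly as written in (27)."""
    a = 1 - t**2 * cmath.exp(-1j * kx)
    b = 1 - t**2 * cmath.exp(-1j * ky)
    return [[0, a, -1, -1],
            [-a.conjugate(), 0, 1, -1],
            [1, -1, 0, b],
            [1, 1, -b.conjugate(), 0]]


def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [[complex(v) for v in row] for row in m]
    n = len(a)
    result = 1 + 0j
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0j
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def closed_form(kx, ky, t):
    return (1 + t**4) ** 2 - 2 * t**2 * (t**4 - 1) * (math.cos(kx) + math.cos(ky))


print(det(b_prime(0.4, -1.3, 0.6)), closed_form(0.4, -1.3, 0.6))
```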

Its determinant is

$$ \det B'(k) = (1+t^4)^2 - 2t^2\,(t^4-1)\,(\cos k_x + \cos k_y). $$

Substituting into the integral, we arrive at the expression for the partition function per site:

![\displaystyle \begin{array}{rcl} \log Z &=& \log t^{-2}~ + \frac 1 2 \iint_{-\pi}^{\pi} \log \big[(1 + t^4)^2 - 2 t^2 (-1 + t^4) (\cos k_x + \cos k_y)\big] ~ \frac{dk_x}{2\pi} \frac{dk_y}{2\pi} \\ &=& \frac 1 2 \iint_{-\pi}^{\pi} \log \big[(t^{-2} + t^2)^2 - 2 (-t^{-2} + t^2) (\cos k_x + \cos k_y)\big] ~ \frac{dk_x}{2\pi} \frac{dk_y}{2\pi} \\ &=& \frac 1 2 \iint_{-\pi}^{\pi} \log \big[4 \cosh^2 2\beta J - 4 \sinh 2\beta J ~(\cos k_x + \cos k_y)\big] ~ \frac{dk_x}{2\pi} \frac{dk_y}{2\pi} \end{array}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cbegin%7Barray%7D%7Brcl%7D+%5Clog+Z+%26%3D%26+%5Clog+t%5E%7B-2%7D%7E+%2B+%5Cfrac+1+2+%5Ciint_%7B-%5Cpi%7D%5E%7B%5Cpi%7D+%5Clog+%5Cbig%5B%281+%2B+t%5E4%29%5E2+-+2+t%5E2+%28-1+%2B+t%5E4%29+%28%5Ccos+k_x+%2B+%5Ccos+k_y%29%5Cbig%5D+%7E+%5Cfrac%7Bdk_x%7D%7B2%5Cpi%7D+%5Cfrac%7Bdk_y%7D%7B2%5Cpi%7D+%5C%5C+%26%3D%26+%5Cfrac+1+2+%5Ciint_%7B-%5Cpi%7D%5E%7B%5Cpi%7D+%5Clog+%5Cbig%5B%28t%5E%7B-2%7D+%2B+t%5E2%29%5E2+-+2+%28-t%5E%7B-2%7D+%2B+t%5E2%29+%28%5Ccos+k_x+%2B+%5Ccos+k_y%29%5Cbig%5D+%7E+%5Cfrac%7Bdk_x%7D%7B2%5Cpi%7D+%5Cfrac%7Bdk_y%7D%7B2%5Cpi%7D+%5C%5C+%26%3D%26+%5Cfrac+1+2+%5Ciint_%7B-%5Cpi%7D%5E%7B%5Cpi%7D+%5Clog+%5Cbig%5B4+%5Ccosh%5E2+2%5Cbeta+J+-+4+%5Csinh+2%5Cbeta+J+%7E%28%5Ccos+k_x+%2B+%5Ccos+k_y%29%5Cbig%5D+%7E+%5Cfrac%7Bdk_x%7D%7B2%5Cpi%7D+%5Cfrac%7Bdk_y%7D%7B2%5Cpi%7D+%5Cend%7Barray%7D+&bg=ffffff&fg=000000&s=0&c=20201002)

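We are done! This is Onsager’s famous result, specialized to the case of equal horizontal and vertical couplings $J$. In going from the first to the second line we divided the argument of the logarithm by $t^4$; in the last line we used $t^{-2} + t^2 = 2\cosh 2\beta J$ and $t^{-2} - t^2 = 2\sinh 2\beta J$ (with $t = e^{-\beta J}$), together with the invariance of the integral under the shift $k \to k + (\pi, \pi)$, which flips the sign of $\cos k_x + \cos k_y$.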
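To see the final formula produce numbers, here is a short numerical sketch using a plain midpoint rule (the grid size and couplings are arbitrary choices). It evaluates the first and the last line of the result; on a symmetric midpoint grid the two agree, which illustrates the $k \to k + (\pi, \pi)$ step. It also checks two limits that follow directly from the formula, $\log 2$ at $\beta J = 0$ and $\approx 2\beta J$ deep in the ordered phase, and the well-known critical value $\tfrac12 \log 2 + 2G/\pi \approx 0.9297$ at $\sinh 2\beta J = 1$, where $G$ is Catalan’s constant.

```python
import math


def _midpoint_cosines(grid):
    """cos k at the midpoints of `grid` equal cells covering [-pi, pi]."""
    h = 2 * math.pi / grid
    return [math.cos(-math.pi + (i + 0.5) * h) for i in range(grid)]


def log_z_cosh_form(beta_j, grid=400):
    """Last line: (1/2) iint log[4 cosh^2 2bJ - 4 sinh 2bJ (cos kx + cos ky)] dk/(2 pi)^2."""
    c = 4 * math.cosh(2 * beta_j) ** 2
    s = 4 * math.sinh(2 * beta_j)
    cos_k = _midpoint_cosines(grid)
    total = math.fsum(math.log(c - s * (cx + cy)) for cx in cos_k for cy in cos_k)
    return 0.5 * total / grid**2


def log_z_t_form(beta_j, grid=400):
    """First line, in the low temperature variable t = exp(-beta J)."""
    t2 = math.exp(-2 * beta_j)  # t^2
    cos_k = _midpoint_cosines(grid)
    total = math.fsum(
        math.log((1 + t2**2) ** 2 - 2 * t2 * (t2**2 - 1) * (cx + cy))
        for cx in cos_k for cy in cos_k
    )
    return -math.log(t2) + 0.5 * total / grid**2


beta_c = 0.5 * math.log(1 + math.sqrt(2))  # sinh(2 beta_c J) = 1
for beta_j in (0.2, beta_c, 1.0):
    print(f"{beta_j:.4f}  {log_z_cosh_form(beta_j):.6f}  {log_z_t_form(beta_j):.6f}")
```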

Exercise: Repeat the calculation using the high temperature variable $\tanh \beta J$ instead of the low temperature variable $t = e^{-\beta J}$. (The final answer is of course the same.)

5. The cubic Ising model

Ever since Onsager published his seminal paper in 1944, tentative “exact solutions” have been proposed for the 3D or cubic Ising model. As mentioned earlier, the Grassmann action is quartic for the cubic Ising model. In quantum field theory, quartic Grassmann actions are associated with models of interacting fermions, whereas quadratic actions are associated with free-fermion models. The latter are easily solved via Pfaffian and determinant formulas, as we have done above, but at present no methods are known that give exact solutions (in the thermodynamic limit) of lattice models with quartic Grassmann actions. Hence, anybody claiming an exact solution to the cubic Ising model must explain how they overcame the mathematical difficulty of dealing with quartic actions, or at least how the new method bypasses this mathematical obstruction.

Barry Cipra, in an article in Science, referred to the Ising problem as the “Holy Grail of statistical mechanics.” The article lists a number of other reasons why we may never attain the goal of finding an explicit exact solution of the cubic Ising model.

Exact solution of the cubic Ising model may be an impossible problem!

I thank Francisco “Xico” Alexandre da Costa and Sílvio Salinas for calling my attention to the Grassmann variables approach to solving the Ising model.